Explaining, with Sam, the generative a-Life, biologically inspired and Granular Synthesis (GS) processes, which are configured by gestural interaction captured with a web-cam [computer vision] and by audio input [looming, loudness, roughness/noisiness]. Sam helped program the transformations of the Neural Oscillator Network (NOSC) and of the GS grain density, size and distribution, which are driven by breathiness in the auditory analysis.
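
The breathiness-to-grain mapping mentioned above can be illustrated with a small sketch. This is a minimal Python example under stated assumptions, not the patch Sam programmed: breathiness is approximated here by spectral flatness (geometric mean over arithmetic mean of a magnitude spectrum), and the hypothetical `GrainParams` fields, the linear mapping and every numeric range are illustrative choices only.

```python
import math
from dataclasses import dataclass


@dataclass
class GrainParams:
    """Hypothetical granular-synthesis controls for one analysis frame."""
    density_hz: float  # grains triggered per second
    size_ms: float     # duration of each grain in milliseconds
    spread: float      # 0 = grains clustered at the read point, 1 = maximally scattered


def spectral_flatness(magnitudes):
    """Geometric mean / arithmetic mean of a magnitude spectrum (roughly 0..1).

    Close to 1 for noise-like (breathy) frames, close to 0 for tonal frames.
    """
    eps = 1e-12  # avoids log(0) for empty bins
    mags = [abs(m) + eps for m in magnitudes]
    geometric = math.exp(sum(math.log(m) for m in mags) / len(mags))
    arithmetic = sum(mags) / len(mags)
    return geometric / arithmetic


def lerp(a, b, t):
    """Linear interpolation from a (t=0) to b (t=1)."""
    return a + (b - a) * t


def breathiness_to_grains(breathiness):
    """Map a breathiness estimate in [0, 1] to grain parameters.

    Assumed mapping: a breathier tone gives denser, shorter and more widely
    distributed grains; the ranges are illustrative only.
    """
    b = min(max(breathiness, 0.0), 1.0)  # clamp out-of-range estimates
    return GrainParams(
        density_hz=lerp(5.0, 80.0, b),
        size_ms=lerp(200.0, 20.0, b),
        spread=b,
    )


if __name__ == "__main__":
    tonal = [0.0] * 64
    tonal[10] = 1.0        # a single partial: tonal, not breathy
    breathy = [1.0] * 64   # flat spectrum: noise-like, breathy
    for name, frame in (("tonal", tonal), ("breathy", breathy)):
        print(name, breathiness_to_grains(spectral_flatness(frame)))
```

A linear map keeps the sketch readable; in performance one would likely smooth the breathiness estimate over several frames so the grain cloud does not flicker with every analysis window.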

Modular Hyper Instrument Design using Biologically Inspired Generative Computation for Real Time Gestural Interaction

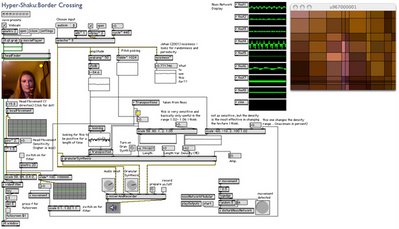

Contemporary computing enables complex generative algorithmic processes to be calculated in real time. This makes it possible to integrate biologically inspired generation into interactive interfaces that require immediate and creative responses. Hyper instrument design is the augmentation of acoustic instrument performance. This chapter describes a model for enhancing traditional instrumental performance with responsive digital audio and visual display, using artificial biological systems to generate new artistic material that meshes with the musical expression. The generative process is triggered and moderated by the gestural interaction of the human performer (sensed by motion sensors, computer vision and computer hearing). The model is a scalable, modular system in which different generative processes can be interchanged to explore their effect on one another and their responsiveness to the performer. The modular approach allows customized interaction design at the sensing, generative computation and display stages for real-time musical instrument augmentation. The modular interaction engine is built to allow customization for different performance scenarios and to produce a proliferation of design possibilities from a scalable, reusable set of computation processes in Max/MSP + Jitter (a graphical real-time programming environment). Interchangeable sensing technologies and adjustable, iterative generative processes are amongst the parameters of individualization. This chapter describes the performance, mapping, transformation and representation phases of the model. These phases are articulated in an example composition, HyperShaku (Border-Crossing), an audio-visually augmented shakuhachi performance environment. The shakuhachi is a traditional Japanese end-blown bamboo Zen flute.
Its five holes and simple construction require subtle and complex gestural movements to produce its diverse range of pitches, vibrato and pitch inflections, making it an ideal candidate for gesture capture. The environment uses computer vision, gesture sensors and computer listening to process and generate electronic music and visualization in real time in response to the live performer. This example implements the integration of the Looming and Neural Oscillator Network (NOSC) generative modules.
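
The modular chain described above (sensing, generative computation and display, with interchangeable modules) can be sketched outside Max/MSP as a list of swappable functions over a shared feature dictionary. This is a minimal Python sketch, not the authors' Max/MSP + Jitter patch: the `LoomingDetector` (a rising-loudness slope test), the `PhaseOscillatorNetwork` (a Kuramoto model of coupled phase oscillators, swapped in as a stand-in for the NOSC module), the `to_visuals` display stage, the feature names and all parameter values are hypothetical.

```python
import math
from typing import Callable, Dict, List

Features = Dict[str, float]
Module = Callable[[Features], Features]


class LoomingDetector:
    """Hypothetical looming module: flags a sustained rise in loudness."""

    def __init__(self, window: int = 8, threshold: float = 0.05):
        self.window = window          # number of recent frames to compare
        self.threshold = threshold    # minimum average loudness rise per frame
        self.history: List[float] = []

    def __call__(self, f: Features) -> Features:
        self.history.append(f.get("loudness", 0.0))
        self.history = self.history[-self.window:]
        steps = max(len(self.history) - 1, 1)
        slope = (self.history[-1] - self.history[0]) / steps
        return {**f, "loudness_slope": slope,
                "looming": 1.0 if slope > self.threshold else 0.0}


class PhaseOscillatorNetwork:
    """Stand-in for a NOSC module: Kuramoto-coupled phase oscillators.

    Assumption: looming strengthens the coupling, pulling the oscillators
    towards synchrony.
    """

    def __init__(self, freqs_hz, dt=0.01, base_coupling=0.5, looming_gain=4.0):
        self.freqs = list(freqs_hz)
        self.phases = [float(i) for i in range(len(self.freqs))]  # staggered start
        self.dt = dt
        self.base_coupling = base_coupling
        self.looming_gain = looming_gain

    def __call__(self, f: Features) -> Features:
        k = self.base_coupling + self.looming_gain * f.get("looming", 0.0)
        n = len(self.phases)
        # Euler step of d(theta_i)/dt = omega_i + (K/N) * sum_j sin(theta_j - theta_i)
        self.phases = [
            (th + self.dt * (2 * math.pi * w
                             + k / n * sum(math.sin(tj - th) for tj in self.phases)))
            % (2 * math.pi)
            for th, w in zip(self.phases, self.freqs)
        ]
        # Order parameter r: 0 = incoherent, 1 = fully synchronised
        r = math.hypot(sum(math.cos(p) for p in self.phases) / n,
                       sum(math.sin(p) for p in self.phases) / n)
        out = {**f, "sync": r}
        out.update({f"osc{i}": math.sin(p) for i, p in enumerate(self.phases)})
        return out


def pipeline(*modules: Module) -> Module:
    """Chain interchangeable modules; each receives the previous module's features."""
    def run(f: Features) -> Features:
        for module in modules:
            f = module(f)
        return f
    return run


def to_visuals(f: Features) -> Features:
    """Hypothetical display stage: map synchrony and loudness to visual controls."""
    return {**f, "brightness": f.get("sync", 0.0), "scale": 1.0 + f.get("loudness", 0.0)}


if __name__ == "__main__":
    engine = pipeline(LoomingDetector(), PhaseOscillatorNetwork([1.0, 1.1, 0.9]), to_visuals)
    for step in range(10):
        frame = {"loudness": 0.1 * step}  # a crescendo, as a performer might play
        out = engine(frame)
        print(step, out["looming"], round(out["sync"], 3), round(out["brightness"], 3))
```

Swapping a generative process means passing a different callable to `pipeline`, for example a granular module in place of `PhaseOscillatorNetwork`, which mirrors the interchangeability the model is built around; in the HyperShaku environment these stages are Max/MSP + Jitter patches rather than Python.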
